Abstract

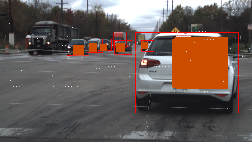

In this paper we present a sensor fusion framework for the detection and classification of objects in autonomous driving applications. The presented method uses a state-of-the-art convolutional neural network (CNN) to detect and classify objects in RGB images. The 2D bounding boxes calculated by the CNN are fused with the 3D point cloud measured by Lidar sensors. An accurate sensor cross-calibration is used to map the Lidar points into the image, where they are assigned to the 2D bounding boxes. A one-dimensional K-means algorithm is applied to separate object points from foreground and background points and to calculate accurate 3D centroids for all detected objects. The proposed algorithm is tested on real-world data and shows stable and reliable object detection and centroid estimation in different kinds of situations.
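The fusion step summarized above can be illustrated with a minimal sketch. It is not the authors' implementation, and several details are assumptions the abstract does not state: a pinhole camera model with intrinsic matrix `K`, a 4x4 extrinsic transform `T_cam_lidar` obtained from the cross-calibration, clustering on the Euclidean range of each point, `k = 3` clusters (foreground, object, background), and the most populated cluster taken as the object. All function names are hypothetical.

```python
import numpy as np

def project_points(points_lidar, T_cam_lidar, K):
    """Map Nx3 Lidar points into the image (assumed pinhole model).

    Returns the pixel coordinates and the camera-frame 3D coordinates
    of all points that lie in front of the camera.
    """
    homogeneous = np.hstack([points_lidar, np.ones((len(points_lidar), 1))])
    pts_cam = (T_cam_lidar @ homogeneous.T).T[:, :3]
    pts_cam = pts_cam[pts_cam[:, 2] > 0]       # drop points behind the camera
    uvw = (K @ pts_cam.T).T
    pixels = uvw[:, :2] / uvw[:, 2:3]          # perspective division
    return pixels, pts_cam

def kmeans_1d(values, k=3, iters=20):
    """Lloyd's algorithm on scalars; centers start at evenly spaced quantiles."""
    centers = np.quantile(values, np.linspace(0.0, 1.0, k))
    for _ in range(iters):
        labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
        for j in range(k):
            if np.any(labels == j):            # keep old center if cluster is empty
                centers[j] = values[labels == j].mean()
    labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
    return labels, centers

def object_centroid(pixels, pts_cam, box, k=3):
    """Estimate a 3D centroid for one 2D box given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    inside = ((pixels[:, 0] >= x_min) & (pixels[:, 0] <= x_max) &
              (pixels[:, 1] >= y_min) & (pixels[:, 1] <= y_max))
    pts = pts_cam[inside]
    if len(pts) < k:
        return None                            # too few points to cluster
    ranges = np.linalg.norm(pts, axis=1)       # assumed 1D feature: range
    labels, _ = kmeans_1d(ranges, k)
    # Assumption: the object is the most populated range cluster.
    object_label = np.bincount(labels, minlength=k).argmax()
    return pts[labels == object_label].mean(axis=0)
```

Clustering on a single scalar keeps the separation cheap and exploits the fact that points inside one bounding box differ mainly in distance from the sensor. The cluster selection rule is the part most likely to differ from the paper and would need to be adapted to the actual method.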